Friday, December 30, 2022

Tuesday, December 27, 2022

The goals of code quality

Code should work

This one is so obvious that it probably doesn’t need stating, but I’ll go ahead and say it anyway. When we write code, we are trying to solve a problem, such as implementing a feature, fixing a bug, or performing a task. The primary aim of our code is that it should work: it should solve the problem that we intend it to solve. This also implies that the code is bug-free, because the presence of bugs will likely prevent it from working properly and fully solving the problem.

When defining what code “working” means, we need to be sure to actually capture all the requirements. For example, if the problem we are solving is particularly sensitive to performance (such as latency or CPU usage), then ensuring that our code is adequately performant comes under “code should work,” because it’s part of the requirements. The same applies to other important considerations such as user privacy and security.

Code should keep working

Code “working” can be a very transient thing; it might work today, but how do we make sure that it will still be working tomorrow, or in a year’s time? This might seem like an odd question: “if nothing changes, then why would it stop working?”, but the point is that stuff changes all the time:

- Code likely depends on other code that will get modified, updated, and changed.

- Any new functionality may require modifications to the code.

- The problem we’re trying to solve might evolve over time: consumer preferences, business needs, and technology considerations can all change.

Code that works today but breaks tomorrow when one of these things changes is not very useful. It’s often easy to create code that works, but a lot harder to create code that keeps working. Ensuring that code keeps working is one of the biggest considerations that software engineers face, and it needs to be considered at all stages of coding. Treating it as an afterthought, or simply assuming that adding some tests later on will achieve it, is often not an effective approach.

Code should be adaptable to changing requirements

It’s actually quite rare that a piece of code is written once and then never modified again. Continued development on a piece of software can span several months, usually several years, and sometimes even decades. Throughout this process, requirements change:

- business realities shift

- consumer preferences change

- assumptions get invalidated

- new features are continually added

Deciding how much effort to put into making code adaptable can be a tricky balancing act. On the one hand, we pretty much know that the requirements for a piece of software will evolve over time (it’s extremely rare that they don’t). But on the other hand, we often have no certainty about exactly how they will evolve. It’s impossible to make perfectly accurate predictions about how a piece of code or software will change over time. But just because we don’t know exactly how something will evolve, it doesn’t mean that we should completely ignore the fact that it will evolve. To illustrate this, let’s consider two extreme scenarios:

- Scenario A — We try to predict exactly how the requirements might evolve in the future and engineer our code to support all of these potential changes. We will likely spend days or weeks mapping out all the ways that we think the code and software might evolve. We’ll then have to carefully deliberate over every minutia of the code we write to ensure that it supports all of these potential future requirements. This will slow us down enormously; a piece of software that might have taken three months to complete might now take a year or more. And at the end of it, it will probably have been a waste of time, because a competitor will have beaten us to the market by several months, and our predictions about the future will probably turn out to be wrong anyway.

- Scenario B — We completely ignore the fact that the requirements might evolve. We write code to exactly meet the requirements as they are now and put no effort into making any of the code adaptable. Brittle assumptions get baked all over the place and solutions to subproblems are all bundled together into large inseparable chunks of code. We get the first version of the software launched within three months, but the feedback from the initial set of users makes it clear that we need to modify some of the features and add some new ones if we want the software to be successful. The changes to the requirements are not massive, but because we didn’t consider adaptability when writing the code, our only option is to throw everything away and start again. We then have to spend another three months rewriting the software, and if the requirements change again, we’ll have to spend another three months rewriting it again after that. By the time we’ve created a piece of software that actually meets the users’ needs, a competitor has once again beaten us to it.

Scenario A and scenario B represent two opposing extremes. The outcome in both scenarios is quite bad and neither is an effective way to create software. Instead, we need to find an approach somewhere in the middle of these two extremes. There’s no single answer for which point on the spectrum between scenario A and scenario B is optimal. It will depend on the kind of project we’re working on and on the culture of the organization we work for.

Code should not reinvent the wheel

When we write code to solve a problem, we generally take a big problem and break it down into many subproblems. For example, if we were writing some code to load an image file, turn it into a grayscale image, and then save it again, the subproblems we need to solve are:

- Load some bytes of data from a file

- Parse the bytes of data into an image format

- Transform the image to grayscale

- Convert the image back into bytes

- Save those bytes back to the file

Many of these subproblems have already been solved by others. For example, loading some bytes from a file is likely something that the programming language has built-in support for. We wouldn’t go and write our own code to do low-level communication with the file system.

Similarly, there is probably an existing library that we can pull in to parse the bytes into an image. If we do write our own code to do low-level communication with the file system or to parse some bytes into an image, then we are effectively reinventing the wheel. There are several reasons why it’s best to make use of an existing solution over reinventing it:

- It saves a lot of time — If we made use of the built-in support for loading a file, it’d probably take only a few lines of code and a few minutes of our time. In contrast, writing our own code to do this would likely require reading numerous standards documents about file systems and writing many thousands of lines of code. It would probably take us many days, if not weeks.

- It decreases the chance of bugs — If there is existing code somewhere to solve a given problem, then it should already have been thoroughly tested. It’s also likely that it’s already being used in the wild, so the chance of the code containing bugs is lowered, because if there were any, they’ve likely been discovered and fixed already.

- It utilizes existing expertise — The team maintaining the code that parses some bytes into an image are likely experts on image encoding. If a new version of JPEG encoding comes out, then they’ll likely know about it and update their code. By reusing their code, we benefit from their expertise and future updates.

- It makes code easier to understand — If there is a standardized way of doing something then there’s a reasonable chance that another engineer will have already seen it before. Most engineers have probably had to read a file at some point, so they will instantly recognize the built-in way of doing that and understand how it functions. If we write our own custom logic for doing this, then other engineers will not be familiar with it and won’t instantly know how it functions.

The concept of not reinventing the wheel applies in both directions. If another engineer has already written code to solve a subproblem, then we should call their code rather than writing our own to solve it. But similarly, if we write code to solve a subproblem, then we should structure our code in a way that makes it easy for other engineers to reuse, so they don’t need to reinvent the wheel.

The same classes of subproblems often crop up again and again, so the benefits of sharing code between different engineers and teams are often realized very quickly.
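To make the reuse idea concrete, here is a minimal Python sketch of the grayscale subproblem written as a small function with no file or format handling, so other engineers could call it from anywhere. It assumes an existing image library has already decoded the bytes into (R, G, B) tuples; the name `to_grayscale` is hypothetical. The weights are the ITU-R 601-2 luma coefficients, the same ones Pillow documents for its "L" mode conversion.

```python
from typing import Iterable, List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def to_grayscale(pixels: Iterable[Pixel]) -> List[int]:
    """Convert decoded (R, G, B) pixels to 8-bit gray levels.

    Uses the ITU-R 601-2 luma weights. No I/O happens here, so the
    function can be reused wherever pixels are already decoded.
    """
    return [round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in pixels]
```

For example, `to_grayscale([(255, 0, 0), (0, 0, 0)])` gives `[76, 0]`. Loading and saving bytes would stay with the language's built-ins (e.g. `pathlib.Path.read_bytes`), and parsing would stay with an existing image library.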

Monday, December 26, 2022

Types of Code

- Code base — the repository of code from which pieces of software can be built. This will typically be managed by a version control system such as Git, Subversion, Perforce, etc.

- Submitting code — sometimes called “committing code”, or “merging a pull request”. A programmer will typically make changes to the code in a local copy of the code base. Once they are happy with the change, they will submit it to the main code base. Note: in some setups, a designated maintainer has to pull the changes into the code base, rather than the author submitting them.

- Code review — many organizations require code to be reviewed by another engineer before it can be submitted to the code base. This is a bit like having code proofread: a second pair of eyes will often spot issues that the author of the code missed.

- Pre-submit checks — sometimes called “pre-merge hooks”, “pre-merge checks”, or “pre-commit checks”. These will block a change from being submitted to the code base if tests fail, or if the code does not compile.

- A release — a piece of software is built from a snapshot of the code base. After various quality assurance checks, this is then released “into the wild”. You will often hear the phrase “cutting a release” to refer to the process of taking a certain revision of the code base and making a release from it.

- Production — this is the proper term for “in the wild” when software is deployed to a server or a system (rather than shipped to customers). Once software is released and performing business-related tasks, it is said to be “running in production”.
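A pre-submit check is, at its core, a script that runs some checks and refuses the change if any of them fail. The Python sketch below assumes a local Git setup, where a script saved as an executable `.git/hooks/pre-commit` aborts the commit when it exits with a non-zero status. The function name `run_presubmit` and the `CHECKS` list are hypothetical; a real team would plug in its own build and test commands.

```python
#!/usr/bin/env python3
import subprocess
import sys
from typing import Sequence

# Hypothetical checks: byte-compile all sources, then run the unit tests.
CHECKS = [
    [sys.executable, "-m", "compileall", "-q", "."],
    [sys.executable, "-m", "unittest", "discover"],
]


def run_presubmit(commands: Sequence[Sequence[str]]) -> int:
    """Run each check in order; return the first non-zero exit code, else 0."""
    for cmd in commands:
        result = subprocess.run(list(cmd))
        if result.returncode != 0:
            print(f"pre-submit check failed: {' '.join(cmd)}", file=sys.stderr)
            return result.returncode
    return 0
```

Used as a hook, the script would end with `sys.exit(run_presubmit(CHECKS))`, so a failing compile or test blocks the commit. Server-side pre-merge checks follow the same idea but run in CI instead of on the author's machine.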

Sunday, December 11, 2022

Sunday, December 04, 2022

Sunday, October 23, 2022

The concept of probability for discrete variables can be extended to that of a probability density p(x) over a continuous variable x, defined such that the probability of x lying in the interval (x, x+δx) is given by p(x)δx for δx → 0. The probability density can be expressed as the derivative of a cumulative distribution function P(x).
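The relationship between p(x), P(x) and the interval probability can be checked numerically. This sketch uses a standard Gaussian purely as an example density (the text does not single out any distribution): the exact probability of landing in (x, x+δx) is P(x+δx) − P(x), and dividing it by a small δx recovers p(x).

```python
import math


def normal_pdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """Probability density p(x) of a Gaussian."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2 * math.pi))


def normal_cdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """Cumulative distribution P(x): probability that the variable is <= x."""
    return 0.5 * (1 + math.erf((x - mu) / (sigma * math.sqrt(2))))


def interval_probability(x: float, dx: float) -> float:
    """Probability of lying in (x, x + dx), computed exactly from the CDF."""
    return normal_cdf(x + dx) - normal_cdf(x)
```

For small `dx`, `interval_probability(x, dx)` is approximately `normal_pdf(x) * dx`, which is the finite-difference version of p(x) = dP/dx.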

An illustration of a distribution over two variables: X, which takes 9 possible values, and Y, which takes two possible values. The top left figure shows a sample of 60 points drawn from a joint probability distribution over these variables. The remaining figures show histogram estimates of the marginal distributions p(X) and p(Y), as well as the conditional distribution p(X|Y = 1) corresponding to the bottom row in the top left figure.
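The histogram estimates described in that figure come from simple counting. The sketch below assumes the samples are stored as (x, y) pairs; the function names are hypothetical and the tiny sample in the test is made up, not the 60 points from the figure.

```python
from collections import Counter
from typing import Dict, Hashable, List, Tuple

Sample = Tuple[Hashable, Hashable]  # one (x, y) draw from the joint distribution


def marginal(samples: List[Sample], axis: int) -> Dict[Hashable, float]:
    """Histogram estimate of p(X) (axis=0) or p(Y) (axis=1)."""
    counts = Counter(s[axis] for s in samples)
    n = len(samples)
    return {value: c / n for value, c in counts.items()}


def conditional_x_given_y(samples: List[Sample], y: Hashable) -> Dict[Hashable, float]:
    """Histogram estimate of p(X | Y = y): normalize counts within the matching row."""
    row = [x for x, y_i in samples if y_i == y]
    counts = Counter(row)
    return {x: c / len(row) for x, c in counts.items()}
```

The marginal normalizes by the total number of samples, while the conditional normalizes only by the samples in the selected row, which is why p(X|Y = 1) can differ from p(X).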

Thursday, October 20, 2022

Monday, September 05, 2022

Saturday, August 27, 2022

Monday, July 25, 2022

Saturday, July 16, 2022

A good scientific talk is a good scientific story

A typical plot diagram for an action movie can be similar to the narrative structure of a scientific presentation. Just as a protagonist overcomes obstacles through a series of action scenes, a scientist pursues scientific objectives through a series of experiments to reach a goal. As the scientist comes closer to reaching the goal, curiosity peaks, the initial scientific question is answered, and the presentation reaches a sense of resolution.

Thursday, July 07, 2022

Top non-technical nuggets of coding wisdom

- Your code should work first; you can worry about optimization later.

- Your code needs to run on a machine, but it also needs to be read by a human.

- Keep away from boxes; you’re a developer.

- Write unit tests for your code!

- Knowledge is everywhere; learn how to tap into it and make the most of it.

- Side projects are fantastic and should not be a scary thing to tackle.

- Avoid companies that are like family.

- Unlimited time off can be a trap, but it can also be a wonderful perk; make sure you ask the right questions.

- Trivial perks that only adorn the job offering, such as free food and chill-out zones, should be ignored.

- Flexible hours, paid parental leave, company gear, and other useful perks are what you should look for as part of a job offer.

- Plan first, code later. That way you have a blueprint to base your work on.

- Everyone in your team is as important as you, even those who don’t write code.

- Your ego can be the end of your career; you have to learn to keep it under control.

- Do not fear code reviews. They’re a perfect learning opportunity; learn to get the most out of them.

Sunday, June 26, 2022

Attention Rebellion

- Cause One: The Increase in Speed, Switching, and Filtering

- Cause Two: The Crippling of Our Flow States

- Cause Three: The Rise of Physical and Mental Exhaustion

- Cause Four: The Collapse of Sustained Reading

- Cause Five: The Disruption of Mind-Wandering

- Cause Six: The Rise of Technology That Can Track and Manipulate You

- Cause Seven: The Rise of Cruel Optimism

- Cause Eight: The Surge in Stress and How It Is Triggering Vigilance

- Causes Nine and Ten: Our Deteriorating Diets and Rising Pollution

- Cause Eleven: The Rise of ADHD and How We Are Responding to It

- Cause Twelve: The Confinement of Our Children, Both Physically and Psychologically

Friday, June 24, 2022

Complex Is Better Than Complicated

The Zen of Python—a collection of principles that summarize the core philosophy of the language—has crystal-clear points like these:

- Simple is better than complex.

- Complex is better than complicated.

- Flat is better than nested.

- Readability counts.

- If the implementation is easy to explain, it may be a good idea.
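“Flat is better than nested” is easiest to see side by side. The two functions below are hypothetical and implement the same made-up shipping rule; the second one uses early returns (guard clauses) so the logic reads top to bottom without piling up indentation.

```python
def shipping_cost_nested(order: dict) -> float:
    # Each condition adds another level of indentation.
    if order.get("items"):
        if order.get("country") == "US":
            if order["total"] >= 50:
                return 0.0
            else:
                return 5.0
        else:
            return 15.0
    else:
        raise ValueError("empty order")


def shipping_cost_flat(order: dict) -> float:
    # Guard clauses handle the special cases first, keeping the main path flat.
    if not order.get("items"):
        raise ValueError("empty order")
    if order.get("country") != "US":
        return 15.0
    if order["total"] >= 50:
        return 0.0
    return 5.0
```

Both versions behave identically; the flat one is also easier to explain, which ties back to the last principle above. The full Zen of Python can be printed in any Python interpreter with `import this`.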

DATA INTEGRITY

Data integrity refers to the physical characteristics of collected data that determine the reliability of the information. Data integrity is based on parameters such as completeness, uniqueness, timeliness, accuracy, and consistency.

Completeness

Data completeness refers to collecting all items necessary for the full description of the states of a considered object or process. A data item is considered complete if its digital description contains all attributes that are strictly required for human or machine comprehension. In other words, it may be acceptable to have missing pieces in the expected records (e.g., no contact information) as long as the remaining data is comprehensive enough for the domain.

For example, when a sensor (e.g., an IoT sensor) is involved, you might want it to sample data every 10 minutes, or even more often if the scenario requires it. At the same time, you might want to be sure that the timeline is continuous, with no gaps in between. If you plan to use that data to predict possible hardware failures, then you need to be sure you are sampling closely enough around the target event and not missing anything along the way.

Completeness results from having no gaps between what was supposed to be collected and what was actually collected. In automatic data collection (e.g., IoT sensors), this aspect is also related to physical connectivity and data availability.
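For a sensor timeline, completeness can be measured by comparing the expected sampling slots with the slots that actually received a reading. This is a minimal sketch assuming readings are plain `datetime` timestamps and a fixed expected interval (10 minutes in the example above); the function names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def find_gaps(timestamps: List[datetime], interval: timedelta) -> List[Tuple[datetime, datetime]]:
    """Return (start, end) pairs where consecutive readings are more than `interval` apart."""
    ordered = sorted(timestamps)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > interval]


def completeness(timestamps: List[datetime], start: datetime, end: datetime,
                 interval: timedelta) -> float:
    """Fraction of expected slots in [start, end] that contain at least one reading."""
    expected = int((end - start) / interval) + 1
    # Map each reading to the index of the sampling slot it falls into.
    slots = {int((t - start) / interval) for t in timestamps if start <= t <= end}
    return len(slots) / expected
```

A result of 1.0 means no gaps; anything lower points at the periods (returned by `find_gaps`) where connectivity or availability problems may have dropped data.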

Uniqueness

When large chunks of data are collected and sampled for further use, there’s the concrete risk that some data items are duplicated. Depending on the business requirements, duplicates may or may not be an issue. Poor data uniqueness is an issue if, for example, it could lead to skewed results and inaccuracies.

Uniqueness is fairly easy to define mathematically. It is 100 percent if there are no duplicates. The definition of duplicates, however, depends on the context. For example, two records about Joseph Doe and Joe Doe are apparently unique but may refer to the same individual, in which case they are duplicates that must be cleaned.
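One simple way to express uniqueness as a number is the share of records that remain distinct under some notion of identity. In the sketch below that notion is a key function, because, as noted above, what counts as a duplicate depends on the context. The `NICKNAMES` table and `person_key` function are hypothetical stand-ins for real record-matching rules.

```python
from typing import Callable, Hashable, List, TypeVar

T = TypeVar("T")

# Hypothetical nickname table; real matching rules would be far richer.
NICKNAMES = {"joe": "joseph"}


def uniqueness(records: List[T], key: Callable[[T], Hashable]) -> float:
    """Share of records that are distinct under `key` (1.0 means no duplicates)."""
    if not records:
        return 1.0
    return len({key(r) for r in records}) / len(records)


def person_key(record: dict) -> tuple:
    """Normalize case and nicknames so 'Joe Doe' and 'Joseph Doe' collide."""
    first = record["first"].strip().lower()
    return (NICKNAMES.get(first, first), record["last"].strip().lower())
```

With a naive key, `Joseph Doe` and `Joe Doe` count as two people and uniqueness is 100 percent; with `person_key` they collapse into one and the score drops, flagging records to clean.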

Timeliness

Data timeliness refers to the distribution of data records within an acceptable time frame. The definition of an acceptable time frame is also context-specific. It refers to the duration of the time frame and the appropriate timeline.

In predictive maintenance, for example, the timeline varies by industry. Usually, a 10-minute timeline is more than acceptable, but not for reliable fault predictions in wind turbines. In this case, a 5-minute interval is debated, and some experts suggest an even shorter collection interval.

Duration is the overall time interval for which data collection should occur to ensure reliable analysis of data and satisfactory results. In predictive maintenance, an acceptable duration is on the order of two years’ worth of data.
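Both parts of timeliness, the timeline (how far apart readings may be) and the duration (how long the history must be), can be checked together. This is a sketch under assumptions: timestamps are `datetime` values, and the thresholds are passed in, e.g. 5 minutes and roughly two years (`timedelta(days=730)`, an approximation) for the predictive-maintenance case above. The function name is hypothetical.

```python
from datetime import datetime, timedelta
from typing import List


def is_timely(timestamps: List[datetime], max_interval: timedelta,
              min_duration: timedelta) -> bool:
    """True if readings are never more than `max_interval` apart
    and together span at least `min_duration`."""
    if len(timestamps) < 2:
        return False
    ordered = sorted(timestamps)
    worst_gap = max(b - a for a, b in zip(ordered, ordered[1:]))
    return worst_gap <= max_interval and ordered[-1] - ordered[0] >= min_duration
```

A dataset can fail either way: dense readings that cover only a few weeks, or years of history sampled too sparsely for the fault patterns of interest.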

Accuracy

Data accuracy measures the degree to which the record correctly describes the observed real-world item. Accuracy is primarily about the correctness of the data acquired. The business requirements set the specifications of what would be a valid range of values for any expected data item.

When inaccuracies are detected, some policies should be applied to minimize the impact on decisions. Common practices are to replace out-of-range values with a default value or with the arithmetic mean of values detected in a realistic interval.
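The two common practices mentioned above can be sketched in a few lines. The valid range `[low, high]` stands for whatever the business requirements specify; the function name and the example readings in the test are hypothetical.

```python
from statistics import mean
from typing import List, Optional


def repair_out_of_range(values: List[float], low: float, high: float,
                        default: Optional[float] = None) -> List[float]:
    """Replace values outside [low, high].

    If `default` is given it is used as the replacement; otherwise the
    arithmetic mean of the in-range values is used.
    """
    valid = [v for v in values if low <= v <= high]
    if len(valid) == len(values):
        return list(values)  # nothing to repair
    if default is None:
        if not valid:
            raise ValueError("no in-range values to average")
        default = mean(valid)
    return [v if low <= v <= high else default for v in values]
```

Note that the mean is computed only over in-range values; including the bad readings (such as a sentinel like -999) would distort the replacement itself.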

Consistency

Data consistency measures the difference between the values reported by data items that represent the same object. An example of inconsistency is a negative value of output when no other value reports failures of any kind. Definitions of data consistency are, however, also highly influenced by business requirements.
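Since consistency is about the difference between values that describe the same object, a first check is to group readings by object and look at how far apart they are. The acceptable `tolerance` is an assumption here, standing in for the business-specific definition; the function name and object IDs are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def inconsistent_objects(readings: Iterable[Tuple[str, float]],
                         tolerance: float) -> Dict[str, float]:
    """Group readings by object ID and return the spread (max - min)
    of every object whose values disagree by more than `tolerance`."""
    by_object: Dict[str, List[float]] = defaultdict(list)
    for object_id, value in readings:
        by_object[object_id].append(value)
    spreads = {oid: max(vals) - min(vals) for oid, vals in by_object.items()}
    return {oid: s for oid, s in spreads.items() if s > tolerance}
```

Rule-based checks like the negative-output example above would sit next to this, since they compare different attributes rather than repeated values of the same one.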

Sunday, June 19, 2022

Classifying Objects

The classification problem is about identifying the category an object belongs to. In this context, an object is a data item and is fully represented by an array of values (known as features). Each value refers to a measurable property that makes sense to consider in the scenario under analysis. It is key to note that classification can predict values only in a discrete, categorical set.
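To make the idea of objects as feature arrays mapped onto a discrete set concrete, here is a minimal nearest-centroid classifier. It is chosen purely for brevity; the text does not prescribe any particular algorithm, and the function names and toy data are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def fit_centroids(features: List[Sequence[float]], labels: List[str]) -> Dict[str, List[float]]:
    """Average the feature vectors belonging to each category."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids: Dict[str, List[float]], x: Sequence[float]) -> str:
    """Return the category whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda y: math.dist(centroids[y], x))
```

Whatever the input, `predict` can only return one of the categories seen during fitting, which is the discrete, categorical output the paragraph above describes.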

Variations of the Problem

The actual rules that govern the object-to-category mapping process lead to slightly different variations of the classification problem and subsequently different implementation tasks.

Binary Classification. The algorithm has to assign the processed object to one of only two possible categories. An example is deciding whether, based on a battery of tests for a particular disease, a patient should be placed in the “disease” or “no-disease” group.

Multiclass Classification. The algorithm has to assign the processed object to one of many possible categories. Each object can be assigned to one and only one category. For example, when classifying a candidate’s competency, the result can be any one of poor/sufficient/good/great, but never two at the same time.

Multilabel Classification. The algorithm is expected to provide an array of categories (or labels) that the object belongs to. An example is classifying a blog post, which can be about sports, technology, and perhaps politics at the same time.

Anomaly Detection. The algorithm aims to spot objects in the dataset whose property values are significantly different from the values of the majority of other objects. Those anomalies are also often referred to as outliers.
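A very simple form of anomaly detection flags values that sit far from the mean in units of standard deviation (a z-score). This is only one of many possible techniques and is shown for a single numeric feature; the threshold of 3 is a common rule of thumb, not something the text specifies, and the function name is hypothetical.

```python
from statistics import mean, pstdev
from typing import List


def zscore_outliers(values: List[float], threshold: float = 3.0) -> List[int]:
    """Indices of values more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]
```

Because the outliers themselves inflate the mean and standard deviation, this check becomes less sensitive on small datasets; more robust variants use the median instead.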

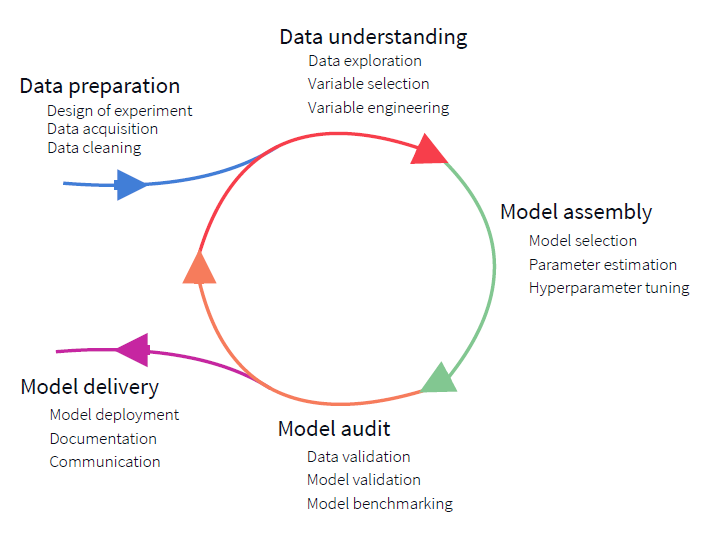

The Dev DataOps Agile cycle

For data science and development teams to work together (and along with domain experts), their skills need to converge: data scientists learning about programming and user experience and, more importantly, developers learning about the intricacies and internal mechanics of machine learning.

Saturday, June 11, 2022

Algorithms are presented in seven groups or kingdoms distilled from the broader fields of study:

- Stochastic Algorithms that focus on the introduction of randomness into heuristic methods.

- Evolutionary Algorithms inspired by evolution by means of natural selection.

- Physical Algorithms inspired by physical and social systems.

- Probabilistic Algorithms that focus on methods that build models and estimate distributions in search domains.

- Swarm Algorithms that focus on methods that exploit the properties of collective intelligence.

- Immune Algorithms inspired by the adaptive immune system of vertebrates.

- Neural Algorithms inspired by the plasticity and learning qualities of the human nervous system.

Sunday, June 05, 2022

Sunday, April 24, 2022

Sunday, April 17, 2022

A Pale Blue Dot

Sunday, April 10, 2022

* as a Service

SAAS - Software as a Service

PAAS - Platform as a Service

IAAS - Infrastructure as a Service

CAAS - Containers as a Service

FAAS - Function as a Service

Wednesday, April 06, 2022

Tuesday, March 22, 2022

Saturday, March 19, 2022

Friday, March 18, 2022

Wednesday, March 09, 2022

Sunday, February 20, 2022

Sunday, February 13, 2022

Friday, January 07, 2022

Learning Rate

Arguably the most important hyperparameter, the learning rate, roughly speaking, controls how fast your neural net “learns”. So why don’t we just amp this up and live life in the fast lane?

Not that simple. Remember, in deep learning, our goal is to minimize a loss function. If the learning rate is too high, our loss will start jumping all over the place and never converge.

And if the learning rate is too small, the model will take way too long to converge, as illustrated above.
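The trade-off can be reproduced with plain gradient descent on a toy loss. The sketch assumes the one-dimensional loss f(x) = x², whose gradient is 2x, instead of a real neural network; each update multiplies x by (1 − 2·lr), which makes the three regimes easy to see.

```python
def gradient_descent(lr: float, steps: int, x0: float = 1.0) -> float:
    """Minimize the toy loss f(x) = x**2 (gradient 2x) and return the final x."""
    x = x0
    for _ in range(steps):
        x -= lr * 2 * x  # step against the gradient
    return x


for lr in (0.001, 0.1, 1.1):
    print(f"lr={lr}: x after 50 steps = {gradient_descent(lr, 50):.4g}")
```

With lr = 0.001 the factor is 0.998, so x barely moves (too slow); with lr = 0.1 the factor is 0.8 and x quickly approaches the minimum at 0; with lr = 1.1 the factor is −1.2, so x flips sign every step and grows, the “jumping all over the place” behavior described above.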

Monday, January 03, 2022

Saturday, January 01, 2022

Understanding the Consistency Models

Consistency defines the rules under which distributed data is available to users. In other words, when new data (i.e., new or updated data) becomes available in a distributed database, the consistency model determines when that data is available to users for reads. Besides strong and eventual, there are three additional consistency models: bounded staleness, session, and consistent prefix.

Strong Consistency Model

Guarantees that any read of an item (such as a customer record) will return the most recent version of such item.

Eventual Consistency Model

When using the eventual consistency model, it is guaranteed that all the replicas will eventually converge to reflect the most recent write.

Bounded Staleness Consistency Model

With bounded staleness, reads may lag behind writes by at most K operations or a time interval t. For an account with only one region, K must be between 10 and 1,000,000 operations, and between 100,000 and 1,000,000 operations if the account is globally distributed.

Session Consistency Model

The session consistency model is named so because the consistency level is scoped at the client session. What this means is that any reads or writes are always current within the same session, and they are monotonic.

Consistent Prefix Consistency Model

The last consistency model is consistent prefix. This model is like the eventual consistency model; however, it guarantees that reads never see out-of-order writes.
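The consistent prefix guarantee can be stated as a simple check: whatever a reader sees must be an in-order prefix of the write sequence. This is a conceptual sketch, not any database SDK; the function name is hypothetical. If writes A, B, C happen in that order, a reader may see nothing, A, A-B, or A-B-C, but never A-C or B-A.

```python
from typing import Sequence


def is_consistent_prefix(write_log: Sequence[str], observed: Sequence[str]) -> bool:
    """True if the writes a reader observes are an in-order prefix of the write log."""
    return list(observed) == list(write_log[: len(observed)])
```

Like eventual consistency, the reader may lag behind; the difference is that the lagging view is never reordered or missing an earlier write.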

Consistency for Queries

By default, any user-defined resource has the same consistency level for queries as was defined for reads.